Captive: The first free NTFS read/write filesystem for GNU/Linux

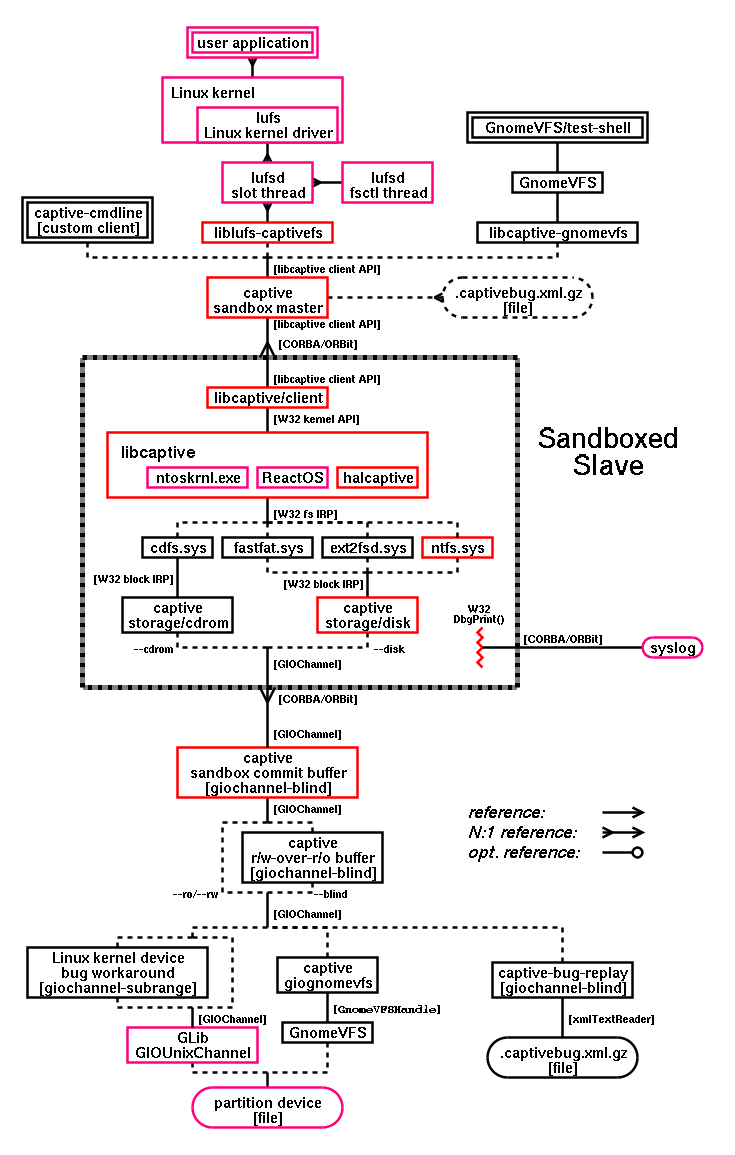

Most of the work of this project is concentrated in the single box called "libcaptive" in the center of the scheme. This component implements the core W32 kernel API by the various methods described in this document. The "libcaptive" box cannot be dissected further, as it is just an implementation of a set of API functions. It could be divided into several subsystems such as the Cache Manager, Memory Manager, Object Manager, Runtime Library, I/O Manager etc., but their mutual references have no interesting structure.

As this project is in fact just a filesystem implementation, every story must begin at the device file and end at the filesystem operations interface. The unified supported interfaces are the GLib GIOChannel (for the device access; GLib is the lowest-level portability, data-type and utility library of Gnome) and the custom libcaptive filesystem API. Each of these ends can be connected to a direct interface (such as the captive-cmdline client), to the Linux Userland File System (LUFS), or to a general Gnome-VFS filter. LUFS will be used in most cases, as it offers a standard filesystem interface through the Linux kernel. You can also use Gnome-VFS, which offers a convenient filter interface at the UNIX user-privilege level for transparent operation with archives and network protocols. This project uses that filter interface to turn a device reference such as /dev/hda3 or /dev/discs/disc0/part3 into a fully accessible filesystem (the device pretends to be an "archive"). Such device access can be specified by Gnome-VFS URLs such as: file:///dev/hda3#captive-fastfat:/autoexec.bat
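The URL above chains three parts: the outer URI of the device image, the filter method name after `#`, and the path inside the filesystem after the following `:`. As a minimal sketch of that layout (a plain string split for illustration only, not the Gnome-VFS URI parser; `struct chained_url` and `chained_url_parse()` are hypothetical names), the parts can be separated like this:

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical result type: the three parts of a chained URL such as
 * "file:///dev/hda3#captive-fastfat:/autoexec.bat". */
struct chained_url {
    char device_uri[256]; /* outer URI of the device, e.g. "file:///dev/hda3" */
    char method[64];      /* filter method name, e.g. "captive-fastfat" */
    char inner_path[256]; /* path inside the filesystem, e.g. "/autoexec.bat" */
};

/* Copy len bytes of src into dst (NUL-terminated); 0 if it fits, -1 otherwise. */
static int copy_part(char *dst, size_t dst_size, const char *src, size_t len)
{
    if (len >= dst_size)
        return -1;
    memcpy(dst, src, len);
    dst[len] = '\0';
    return 0;
}

/* Split on the first '#', then on the first ':' after it.
 * Returns 0 on success, -1 if the string is not a chained URL. */
int chained_url_parse(const char *url, struct chained_url *out)
{
    assert(url != NULL && out != NULL);
    const char *hash = strchr(url, '#');
    if (hash == NULL)
        return -1;
    const char *colon = strchr(hash + 1, ':');
    if (colon == NULL)
        return -1;
    if (copy_part(out->device_uri, sizeof out->device_uri, url, (size_t)(hash - url)) != 0
        || copy_part(out->method, sizeof out->method, hash + 1, (size_t)(colon - hash - 1)) != 0
        || copy_part(out->inner_path, sizeof out->inner_path, colon + 1, strlen(colon + 1)) != 0)
        return -1;
    return 0;
}
```

Searching for `:` only after the `#` matters, because the outer `file:///` URI contains a colon of its own.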

captive-bug-replay serves only for debugging purposes: it 'replays' an existing file.captivebug.xml.gz bug report, which is generated automatically when a W32 filesystem driver fails. The bug report contains all the device data blocks touched up to the moment of the failure, so captive-bug-replay can emulate an internal virtual writable device from this recorded data.
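The replay device can be pictured as a sparse block store: only the blocks captured in the report are readable, and writes made during the replay are kept in memory. The sketch below assumes a fixed 512-byte block size and a small fixed capacity, and does not parse the real .xml.gz report format; `struct replay_device`, `replay_read()` and `replay_write()` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define REPLAY_BLOCK_SIZE 512 /* assumed block size */
#define REPLAY_MAX_BLOCKS 64  /* small fixed capacity for the sketch */

/* One recorded block: its index on the device and its contents. */
struct replay_block {
    unsigned long index;
    unsigned char data[REPLAY_BLOCK_SIZE];
};

/* Hypothetical virtual device assembled from a bug report. */
struct replay_device {
    struct replay_block blocks[REPLAY_MAX_BLOCKS];
    size_t count;
};

static struct replay_block *replay_find(struct replay_device *dev, unsigned long index)
{
    for (size_t i = 0; i < dev->count; i++)
        if (dev->blocks[i].index == index)
            return &dev->blocks[i];
    return NULL;
}

/* Store a block: used both to load the report and for writes during replay. */
bool replay_write(struct replay_device *dev, unsigned long index, const unsigned char *buf)
{
    assert(dev != NULL && buf != NULL);
    struct replay_block *b = replay_find(dev, index);
    if (b == NULL) {
        if (dev->count == REPLAY_MAX_BLOCKS)
            return false;
        b = &dev->blocks[dev->count++];
        b->index = index;
    }
    memcpy(b->data, buf, REPLAY_BLOCK_SIZE);
    return true;
}

/* Reads succeed only for blocks that were recorded (or written since). */
bool replay_read(struct replay_device *dev, unsigned long index, unsigned char *buf)
{
    assert(dev != NULL && buf != NULL);
    const struct replay_block *b = replay_find(dev, index);
    if (b == NULL)
        return false;
    memcpy(buf, b->data, REPLAY_BLOCK_SIZE);
    return true;
}
```

A failed read of an unrecorded block signals that the replay diverged from the original run, since the report never captured that data.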

If the user requests the device to be accessed either in --ro (read-only) mode or in --rw (full read-write) mode, no further device layers are needed. Only the --blind mode involves another layer, which emulates a read-write device on top of the real read-only device by buffering all write requests in memory without ever persisting them.
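The --blind layer behaves like a copy-on-write overlay: reads prefer an in-memory copy of a block if one exists, and writes never reach the base device. A minimal sketch, assuming 512-byte blocks and a memory array standing in for the read-only device (`struct blind_device` and the `blind_*` functions are hypothetical):

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>
#include <string.h>

#define BLIND_BLOCK_SIZE 512 /* assumed block size */

/* Hypothetical --blind layer: a read-only base plus a non-persistent overlay. */
struct blind_device {
    const unsigned char *base; /* read-only device contents (stand-in) */
    size_t nblocks;
    unsigned char **overlay;   /* overlay[i] != NULL once block i was written */
};

bool blind_init(struct blind_device *dev, const unsigned char *base, size_t nblocks)
{
    assert(dev != NULL && base != NULL);
    dev->base = base;
    dev->nblocks = nblocks;
    dev->overlay = calloc(nblocks, sizeof *dev->overlay);
    return dev->overlay != NULL;
}

/* Reads see the overlay copy if present, otherwise the base device. */
bool blind_read(const struct blind_device *dev, size_t block, unsigned char *buf)
{
    if (block >= dev->nblocks)
        return false;
    const unsigned char *src = dev->overlay[block] != NULL
        ? dev->overlay[block]
        : dev->base + block * BLIND_BLOCK_SIZE;
    memcpy(buf, src, BLIND_BLOCK_SIZE);
    return true;
}

/* Writes only fill the overlay; the base device is never modified. */
bool blind_write(struct blind_device *dev, size_t block, const unsigned char *buf)
{
    if (block >= dev->nblocks)
        return false;
    if (dev->overlay[block] == NULL) {
        dev->overlay[block] = malloc(BLIND_BLOCK_SIZE);
        if (dev->overlay[block] == NULL)
            return false;
    }
    memcpy(dev->overlay[block], buf, BLIND_BLOCK_SIZE);
    return true;
}

/* Freeing the overlay discards every write: nothing is persisted. */
void blind_free(struct blind_device *dev)
{
    for (size_t i = 0; i < dev->nblocks; i++)
        free(dev->overlay[i]);
    free(dev->overlay);
    dev->overlay = NULL;
}
```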

The sandbox commit buffer is involved only when the sandboxing feature is active. It buffers all writes to the device during the sandbox run to prevent filesystem damage if the driver fails in the meantime. Once the filesystem is successfully unmounted, the buffer can be safely flushed to the underlying physical media; in the case of a filesystem failure the buffer is dropped instead. The filesystem should therefore be unmounted from time to time: it can be transparently unmounted and remounted by the commit operation of the captive-cmdline custom client. Currently you cannot force a remount when using the LUFS interface client, but the filesystem is remounted automatically after approximately every 1 MB of written data, because the NTFS log file becomes full. Next, the device interface of GIOChannel type has to be transparently connected through CORBA/ORBit to the sandboxed slave.
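Unlike the --blind overlay, the commit buffer eventually does persist its contents, but only on success. A minimal sketch of the flush-or-drop decision, assuming 512-byte blocks and a writable memory array standing in for the physical media; `struct commit_buffer` and the `commit_*` functions are hypothetical names, not the project's API:

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>
#include <string.h>

#define COMMIT_BLOCK_SIZE 512 /* assumed block size */

/* Hypothetical sandbox commit buffer in front of a writable device. */
struct commit_buffer {
    unsigned char *media;   /* underlying physical media (stand-in) */
    size_t nblocks;
    unsigned char *pending; /* buffered copy of each block */
    bool *dirty;            /* dirty[i]: block i has an uncommitted write */
};

bool commit_init(struct commit_buffer *cb, unsigned char *media, size_t nblocks)
{
    assert(cb != NULL && media != NULL);
    cb->media = media;
    cb->nblocks = nblocks;
    cb->pending = malloc(nblocks * COMMIT_BLOCK_SIZE);
    cb->dirty = calloc(nblocks, sizeof *cb->dirty);
    if (cb->pending == NULL || cb->dirty == NULL) {
        free(cb->pending);
        free(cb->dirty);
        return false;
    }
    return true;
}

/* Reads see buffered writes first, then the media. */
bool commit_read(const struct commit_buffer *cb, size_t block, unsigned char *buf)
{
    if (block >= cb->nblocks)
        return false;
    const unsigned char *src = cb->dirty[block]
        ? cb->pending + block * COMMIT_BLOCK_SIZE
        : cb->media + block * COMMIT_BLOCK_SIZE;
    memcpy(buf, src, COMMIT_BLOCK_SIZE);
    return true;
}

/* While the sandboxed driver runs, writes only go to the buffer. */
bool commit_write(struct commit_buffer *cb, size_t block, const unsigned char *buf)
{
    if (block >= cb->nblocks)
        return false;
    memcpy(cb->pending + block * COMMIT_BLOCK_SIZE, buf, COMMIT_BLOCK_SIZE);
    cb->dirty[block] = true;
    return true;
}

/* Successful unmount: flush every buffered block to the media. */
void commit_flush(struct commit_buffer *cb)
{
    for (size_t i = 0; i < cb->nblocks; i++) {
        if (cb->dirty[i]) {
            memcpy(cb->media + i * COMMIT_BLOCK_SIZE,
                   cb->pending + i * COMMIT_BLOCK_SIZE, COMMIT_BLOCK_SIZE);
            cb->dirty[i] = false;
        }
    }
}

/* Driver failure: drop all buffered writes; the media stays untouched. */
void commit_discard(struct commit_buffer *cb)
{
    memset(cb->dirty, 0, cb->nblocks * sizeof *cb->dirty);
}

void commit_free(struct commit_buffer *cb)
{
    free(cb->pending);
    free(cb->dirty);
}
```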

At this point the device is still only a UNIX-style GLib GIOChannel. As it has to be supplied to the W32 filesystem driver, it must be converted to a W32 I/O Device capable of handling IRP (I/O Request Packet; a structure holding the request and result data of any W32 filesystem or W32 block device operation) requests from the W32 filesystem driver above it. Such a W32 I/O Device can represent either a CD-ROM or a disk device type, as different W32 filesystem drivers require different media types; currently only cdfs.sys requires the CD-ROM type.
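The conversion essentially turns each read or write IRP into a positioned transfer on the host-side device and completes it at once. The sketch below uses a greatly simplified request structure rather than the real W32 IRP layout, and a memory array in place of the GIOChannel; all `sketch_*` names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Simplified stand-ins for the few IRP fields this sketch needs;
 * the real W32 IRP structure is much larger. */
enum sketch_major { SKETCH_MJ_READ, SKETCH_MJ_WRITE, SKETCH_MJ_OTHER };
enum sketch_status { SKETCH_OK, SKETCH_END_OF_MEDIA, SKETCH_UNSUPPORTED };
enum sketch_devtype { SKETCH_DISK, SKETCH_CDROM };

struct sketch_irp {
    enum sketch_major major;
    uint64_t offset;           /* byte offset on the media */
    size_t length;             /* requested transfer length */
    unsigned char *buffer;     /* caller's data buffer */
    enum sketch_status status; /* filled in on completion */
    size_t information;        /* bytes actually transferred */
};

/* Host-side media standing in for the GIOChannel. */
struct sketch_media_device {
    enum sketch_devtype type;  /* informational: cdfs.sys would need SKETCH_CDROM */
    unsigned char *data;
    uint64_t size;
};

/* Complete the request synchronously, as host I/O is always "instantly ready". */
void sketch_dispatch(struct sketch_media_device *dev, struct sketch_irp *irp)
{
    assert(dev != NULL && irp != NULL);
    irp->information = 0;
    if (irp->major != SKETCH_MJ_READ && irp->major != SKETCH_MJ_WRITE) {
        irp->status = SKETCH_UNSUPPORTED;
        return;
    }
    /* Overflow-safe bounds check: offset + length must not exceed size. */
    if (irp->offset > dev->size || irp->length > dev->size - irp->offset) {
        irp->status = SKETCH_END_OF_MEDIA;
        return;
    }
    if (irp->major == SKETCH_MJ_READ)
        memcpy(irp->buffer, dev->data + irp->offset, irp->length);
    else
        memcpy(dev->data + irp->offset, irp->buffer, irp->length);
    irp->information = irp->length;
    irp->status = SKETCH_OK;
}
```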

The W32 media I/O Device is accessed by the W32 filesystem driver. The filesystem driver always creates a volume object by IoCreateStreamFileObject(), representing the underlying W32 media I/O Device as an object handled by the filesystem driver itself. Every filesystem request of a client application is first resolved at the level of the filesystem structures, then passed to the volume stream object of the same filesystem, and finally passed to the W32 media I/O Device (implemented by this project as an interface to the GIOChannel described above).

The core W32 kernel implementation in libcaptive calls the filesystem driver synchronously, in a single-shot manner, instead of the multiple reentrancies while waiting for disk I/O completion found in the original Microsoft Windows NT. This single-shot synchronous behaviour is possible because all the needed resources (disk blocks etc.) can always be presented as instantly ready: their acquisition is handled by the Host-OS outside the emulated W32 Guest-OS environment. A few cases needed only by ntfs.sys required support for asynchronous access; there, parallel execution is emulated by GLib g_idle_add_full(), with g_main_context_iteration() called during KeWaitForSingleObject().
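The idea behind the emulated parallelism is that a wait never blocks: it keeps running deferred work until the awaited event is signaled. The following single-threaded sketch imitates that pattern in plain C, with a tiny queue playing the role of g_idle_add_full() and one queue step playing the role of g_main_context_iteration(); it does not use GLib, and `sketch_wait_for_single_object()`, `idle_add()` and the other names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical single-threaded event object. */
struct sketch_event { bool signaled; };

typedef void (*idle_fn)(void *data);

#define IDLE_MAX 16
static struct { idle_fn fn; void *data; } idle_queue[IDLE_MAX];
static size_t idle_head, idle_tail;

/* Defer work that a W32 driver expects to run "in parallel". */
bool idle_add(idle_fn fn, void *data)
{
    assert(fn != NULL);
    if (idle_tail - idle_head == IDLE_MAX)
        return false;
    idle_queue[idle_tail % IDLE_MAX].fn = fn;
    idle_queue[idle_tail % IDLE_MAX].data = data;
    idle_tail++;
    return true;
}

/* Run one pending callback; false if nothing was pending. */
static bool idle_iterate(void)
{
    if (idle_head == idle_tail)
        return false;
    size_t slot = idle_head % IDLE_MAX;
    idle_fn fn = idle_queue[slot].fn;  /* copy out before the slot can be reused */
    void *data = idle_queue[slot].data;
    idle_head++;
    fn(data);
    return true;
}

/* Example deferred work item: completes an operation by signaling its event. */
void sketch_signal_event(void *data)
{
    struct sketch_event *ev = data;
    ev->signaled = true;
}

/* Emulated wait: run deferred work until the event is signaled.
 * Returns false if no work is left, which would be a real deadlock. */
bool sketch_wait_for_single_object(struct sketch_event *ev)
{
    while (!ev->signaled)
        if (!idle_iterate())
            return false;
    return true;
}
```

Because everything runs on one thread, the driver's "parallel" work is simply interleaved at the points where it waits, which keeps the rest of the emulation free of locking.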

libcaptive offers the W32 kernel filesystem API to the upper layers. This is still not the API common W32 applications are used to, as they use W32 libraries which in turn pass the call to the W32 kernel. For example, CreateFileA() is implemented by user-mode libraries (kernel32.dll, relaying through ntdll.dll) as a relay interface to the kernel function IoCreateFile(), which is implemented by the libcaptive W32 kernel emulation component of this project.

As it would be very inconvenient to use the legacy, bloated and UNIX-unfriendly W32 kernel filesystem API, this project offers its own custom filesystem API, inspired by the Gnome-VFS client interface and adapted to the specifics of the W32 kernel API. This interface is meant to be easily used by a custom application accessing the W32 filesystem driver.

CORBA/ORBit comes into play again: the custom filesystem API has to be carried out of the sandboxed slave into UNIX space.

The captive sandbox master hides any restarts of the sandboxed slaves and handles the communication with them. It can also transparently demultiplex the API operations to multiple connected sandbox slaves, as each of them handles just one filesystem device.
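Demultiplexing and restart hiding can be pictured as a lookup table keyed by device: each operation is routed to the slave owning that device, and a dead slave is restarted before the operation is forwarded. This is only a sketch of the routing logic; the real master talks to slave processes over CORBA/ORBit, which is not modelled here, and `struct master`, `master_route()` and `slave_restart()` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define MASTER_MAX_SLAVES 8

/* Hypothetical view of one sandbox slave: it serves exactly one device. */
struct slave {
    char device[64];
    bool alive;
    unsigned restarts;
};

struct master {
    struct slave slaves[MASTER_MAX_SLAVES];
    size_t count;
};

/* Stand-in for spawning a fresh sandboxed slave process. */
static void slave_restart(struct slave *s)
{
    s->alive = true;
    s->restarts++;
}

/* Route an operation to the slave owning `device`: start one on first use,
 * restart it transparently if it died. NULL if no slot is available. */
struct slave *master_route(struct master *m, const char *device)
{
    assert(m != NULL && device != NULL);
    for (size_t i = 0; i < m->count; i++) {
        struct slave *s = &m->slaves[i];
        if (strcmp(s->device, device) == 0) {
            if (!s->alive)
                slave_restart(s);
            return s;
        }
    }
    if (m->count == MASTER_MAX_SLAVES || strlen(device) >= sizeof m->slaves[0].device)
        return NULL;
    struct slave *s = &m->slaves[m->count++];
    strcpy(s->device, device);
    s->alive = true;
    s->restarts = 0;
    return s;
}
```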

The rest of the story is not specific to this project, as it is a common UNIX problem: how to offer a UNIX filesystem implemented in user space as a generic system filesystem (filesystems are usually implemented only as components of the UNIX kernel).

The filesystem service can be offered in several ways:

One possibility is to write a custom client application for this project, such as a file manager or a shell. Although it can provide the most appropriate user interface to the set of functions offered by this project (and the W32 filesystem API), it has the disadvantage of requiring special client software. Such a client is provided by this project as: src/client/cmdline/cmdline-captive

The most usable interface is the LUFS client provided by liblufs-captivefs. As LUFS already assigns a separate process to each filesystem mount, the demultiplexing feature is not used in this case.

LUFS needs multiple operating threads (each UNIX kernel operation needs one free lufsd slot/thread so as not to fail immediately). As libcaptive is single-threaded, liblufs-captivefs always serializes the operations before passing them to libcaptive.
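One straightforward way to serialize many worker threads in front of a single-threaded core is a single global mutex held around every call into the core. The sketch below assumes that approach (the text does not say which synchronization primitive liblufs-captivefs uses); `core_operation()`, `serialized_operation()` and `run_workers()` are hypothetical names, and the worker threads merely simulate lufsd slots.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* One global lock guards every entry into the single-threaded core. */
static pthread_mutex_t core_lock = PTHREAD_MUTEX_INITIALIZER;
static unsigned long core_operations; /* state only the core may touch */

/* Stand-in for a libcaptive call that must never run concurrently. */
static void core_operation(void)
{
    core_operations++;
}

/* Called from any worker thread: at most one thread is inside the core. */
void serialized_operation(void)
{
    pthread_mutex_lock(&core_lock);
    core_operation();
    pthread_mutex_unlock(&core_lock);
}

/* Simulated lufsd worker thread. */
static void *worker(void *arg)
{
    int n = *(const int *)arg;
    for (int i = 0; i < n; i++)
        serialized_operation();
    return NULL;
}

/* Start the workers, wait for them, and return the total operation count. */
unsigned long run_workers(int nthreads, int ops_per_thread)
{
    pthread_t tids[16];
    int created = 0;
    assert(nthreads >= 0 && nthreads <= 16);
    for (; created < nthreads; created++)
        if (pthread_create(&tids[created], NULL, worker, &ops_per_thread) != 0)
            break;
    for (int i = 0; i < created; i++)
        pthread_join(tids[i], NULL);
    return core_operations;
}
```

Without the lock the unsynchronized increments could be lost; with it the total always equals threads multiplied by operations per thread.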

The Gnome-VFS client allows filesystem access without any involvement of the UNIX kernel, from any Gnome-VFS-aware client application (such as gnome-vfs/tests/test-shell). This Gnome-VFS interface connects to the data flow of this project at two points: both as the lowest-layer device image source and as the upper layer for the filesystem operation requests.

Unimplemented and deprecated methods for providing filesystem service:

A native UNIX filesystem must be implemented entirely inside the host OS kernel. This requires special coding methods with limited availability of language features and libraries. It would also give full system control to the untrusted W32 filesystem driver code, with possibly fatal consequences from not yet handled W32 emulation code paths. Although it would offer the best execution performance, this solution was never considered a real possibility.

The common approach to filesystem implementations outside the UNIX OS kernel was a custom NFS server, usually running on the same machine as the NFS-connected client, as such an NFS server is an ordinary UNIX user-space process. It would be possible to implement this project as a custom NFS server, but the NFS protocol itself has many fundamental flaws and complicated code for backward compatibility.
